For More Information

The Second Language Proficiency Test (SLPT) is an educational resource created by the Collegial Centre for Educational Materials Development (CCDMD) for the Québec college network.

The CCDMD’s mandate is to ensure that students throughout the network have access to quality educational materials in both French and English, and to contribute to the development of such materials at the different stages of production. The CCDMD produces materials for students in a wide range of courses and programs, as well as documents intended specifically to help them improve their French and English language skills.

These services are funded by the Québec Ministère de l’Éducation, de l’Enseignement supérieur et de la Recherche and, in some cases, benefit from support under the Canada-Québec Agreement on Minority-language Education and Second Language Instruction.

Colleges wishing to use this test must subscribe to it. Anyone wishing to use it for commercial purposes must enter into a specific agreement with the CCDMD. To become a subscriber or to obtain more information, please contact the CCDMD by email at info@ccdmd.qc.ca or by telephone at 514 873-2200.

Background

Initially, the project was implemented by the Ministère de l’Éducation, du Loisir et du Sport (MELS) through its 2004-2008 second language action plan, when it agreed to develop, along with the Official Languages Program, a scale of second language proficiency levels and the related evaluation mechanisms. The general objective of the project was to improve the second language proficiency of college graduates, as recommended by the Commission d’évaluation de l’enseignement collégial (CEEC).

The project led to a long collaboration between the members of the coordinating committees responsible for the production of French and English tests: a large number of teachers and professionals in the French and English CEGEP networks participated in the first phase of development of the linguistic scales and items associated with the resulting tests.

In the fall of 2012, the Collegial Centre for Educational Materials Development (CCDMD) was entrusted with a new mandate to develop the second language proficiency test. In 2012-2013, the CCDMD consulted some twenty professionals and experts in the college and university networks. It set up a production team, with project managers, content specialists, programmers and technicians.

When the technical analysis was concluded, the CCDMD proposed an adaptive test model and a linguistic scale based on new criteria.

Educational Objectives

The primary objective of the Second Language Proficiency Test (SLPT) is to determine the proficiency level of the respondent in order to propose appropriate courses as well as tailored learning activities within those courses. When the respondent finishes the test, the result is given as a number from 1 to 10 and corresponds to a level on the Scale of Second Language Proficiency Levels for the College Student. The teacher and student can then consult the proficiency level descriptions for information on what the student is able to do at that level. The teacher can also use this information to plan learning activities to help the student progress to the next level. As a result, the SLPT goes further than a traditional placement test designed strictly to assign a student to a course level according to his or her results. Nonetheless, the head of a subscribing college has the option, in the management module, of associating a course number with each of the 10 levels.

The 10 levels in the Scale of Second Language Proficiency Levels for the College Student are closely related to the levels in other recognized international scales. The proficiency of students taking an upgrading (mise à niveau) course is at about level 1. A level 10 result means that the student may be more proficient than the maximum level measured by the test. Ideally, college graduates should reach level 8: subsequent university training should enable students to fine-tune their proficiency and achieve level 9 or higher during their university studies. Students registered in some ACS (attestation of collegial studies) programs would be well advised to achieve this level of proficiency so they can pass the OLF (Office de la langue française) examination for their profession.

Features

The Scale of Second Language Proficiency Levels for the College Student includes 10 levels. The SLPT is made up of two categories of items: written comprehension and oral comprehension. However, the platform can accommodate two additional categories, if required.

There are six types of items: associations, multiple choice, sequencing, multiple answers, cloze text with drag and drop, and cloze text with menus. Each item is accompanied by a timer, a volume control slider (oral comprehension items only), and a button that takes the respondent to the next item. The items are presented in random order. Each item includes:

- general instructions, provided in words and images;

- a text (written comprehension) or audio recording (oral comprehension);

- a question;

- a choice of answers.

The SLPT is adaptive. Each answer, correct or incorrect, affects the progression of the test, so that the respondent’s proficiency level can be determined quickly and efficiently. The platform can also operate in non-adaptive mode, so that the items can be tested and calibrated. However, the performance of the SLPT and the reliability of the results, in particular, depend on the interaction of the platform with appropriate content that is carefully targeted, finely calibrated, associated with predefined levels of difficulty, and classified in relevant categories.

Sample Item Types

There are six item types:

- Associations: Drag and drop the answer choices to form pairs.

- Multiple choice: Select the correct answer from a list of possible answers.

- Sequencing: Drag and drop the answer choices in the correct order to form a logical text.

- Multiple answers: Select all of the correct answers from a list of possible answers.

- Cloze text with drag-and-drop: Drag and drop the correct answers into the blank spaces.

- Cloze text with menus: Select the correct answer from a list of possible answers.

The answers to the following questions can be found in this text:

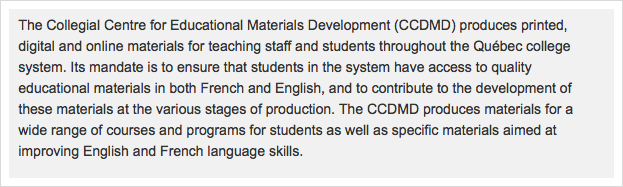

ASSOCIATIONS: Drag and drop the answer choices to form pairs.

Respond to an association question by pairing each of a set of elements in the 2nd column with one of the choices provided in the 1st column. Association questions are used to assess recognition and recall of related information. The response is deemed correct when the respondent has successfully matched all of the elements.

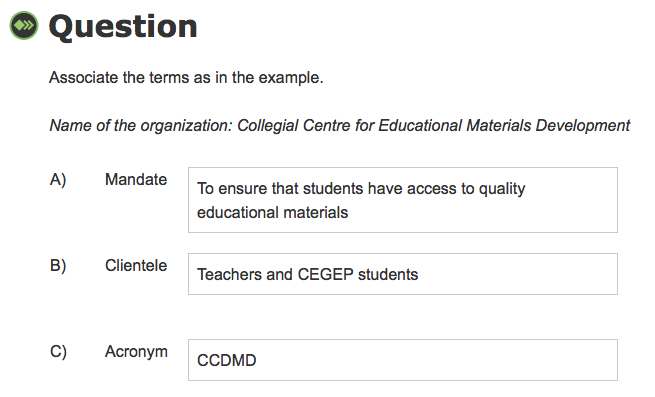

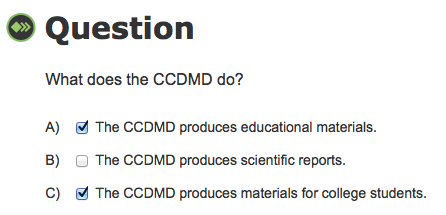

MULTIPLE CHOICE: Select the correct answer from a list of possible answers.

Respond to a multiple choice question by selecting the correct answer from a list that includes the correct answer and several incorrect answers. Multiple choice questions are used to test comprehension of a broad range of content. The context of the item and the type of response required determine whether the item tests recall or interpretation. The response is deemed correct when the respondent has selected the single correct answer.

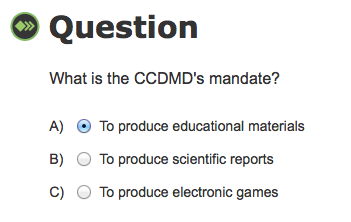

SEQUENCING: Drag and drop the answer choices in the correct order to form a logical text.

Respond to a sequencing question by dragging the elements into the correct order. Sequencing questions are used to test the ability to recall a series of events or a process in the order in which it was presented. They measure the ability to construct meaning and comprehend logical consequences expressed in verbal or written form. The response is deemed correct when the respondent has arranged all of the elements in the correct order.

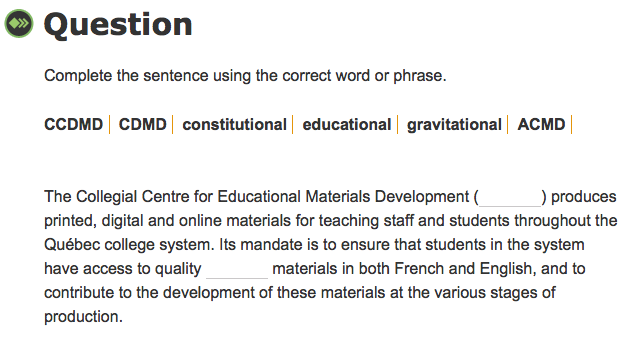

CLOZE TEXT WITH DRAG-AND-DROP: Drag and drop the correct answers into the blank spaces.

Respond to a cloze text with drag-and-drop question by filling in the blanks in a text. Drag the correct answer from a predefined set of possible answers above the text to the appropriate blank in the text. Cloze text with drag-and-drop questions are used to assess language skills by imitating the editing process. They measure the ability to infer the most appropriate conclusion to an incomplete statement. The response is deemed correct when the respondent has filled in all of the blanks correctly.

CLOZE TEXT WITH MENUS: Select the correct answer from a list of possible answers.

Respond to a cloze text with menus question by filling in the blanks in a text. Select the correct answer from a predefined list of possible answers. Cloze text with menus questions are used to assess the ability to compare options and make accurate choices. Cloze text with menus questions are simpler to decode than cloze text with drag-and-drop, as they narrow the range of correct answers. The response is deemed correct when the respondent has selected the single correct answer.
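The correctness rules stated above for each item type are all-or-nothing: a response counts as correct only when every pair, position or blank is right. A minimal sketch of those rules follows. The function names and data shapes are hypothetical and chosen only for illustration; they are not taken from the SLPT platform.

```python
def association_is_correct(pairs: dict[str, str], key: dict[str, str]) -> bool:
    """Correct only when every element is matched with its expected partner."""
    return pairs == key


def sequence_is_correct(order: list[str], key: list[str]) -> bool:
    """Correct only when all elements are arranged in the expected order."""
    return order == key


def cloze_is_correct(blanks: list[str], key: list[str]) -> bool:
    """Correct only when every blank holds the expected answer."""
    return blanks == key


def multiple_choice_is_correct(selected: str, key: str) -> bool:
    """Correct only when the single correct answer is selected."""
    return selected == key
```

Under these rules there is no partial credit: a sequencing response with two elements swapped is scored the same as one that is entirely out of order.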

Accessibility

The educational design of the SLPT is based on principles of universal accessibility. The written instructions for each item, intended to guide the respondent in his or her approach, are accompanied by visual instructions. Thus, a respondent who is not sufficiently proficient to read the written instructions should be able to understand the task and answer the question. Visual instructions are provided for all items, including those in the advanced levels. This strategy creates a predictable virtual environment suitable for various types of learning.

Images have been included with the text or audio recording in the majority of items in proficiency levels 1 to 3. In some cases, the answer choices consist of numbers, images or even colours. An alternative text for each audio recording and image has been provided for in the editor.

Accommodation for Students with Special Needs

Since the objective of the SLPT is to determine reading and listening proficiency, no support measure for written content (screen reader) or for oral content (audio transcription) has been provided for in the test. The allotted answer time for each item is regulated by the administrator.

The test is an objective measure of second language proficiency throughout the college network. We thus ask educational institutions not to adapt it, as we wish to avoid distortion of the data collected.

The CCDMD development team would be pleased to discuss the development of an untimed replacement test, hosted on a separate virtual environment, to meet the needs of colleges having to accommodate groups of students with special needs.

The CCDMD can be reached at 514 873-2200 or info@ccdmd.qc.ca.

Administering the Test

The SLPT is intended to be administered twice during a student’s college studies: once at the beginning and once before the diploma is awarded. The head of a subscribing college may decide to use it differently: the test can be used as a placement test under certain conditions or as a test within a language course. However, since students do not progress rapidly to a higher level of proficiency, it is recommended that students not take the test numerous times during their studies.

The subscription manager can create groups and define the testing schedule from the management module. The manager has access to both the overall results and detailed data and can decide whether or not to display the respondent’s results on screen at the end of the test.

The test can be taken online from home, in class or in a lab. Students can access the test using an ID and a password sent by the test manager. The monitoring of tests administered in class or in a lab is facilitated by the fact that the test progression (the type and order of items) varies with the respondent. Similarly, the correct answer is not always in the same position in the list of answer choices.

Scale of Proficiency Levels

The scale used for the SLPT was developed on the basis of a comparison with three recognized scales in Québec: the Échelle québécoise des niveaux de compétence en français des personnes immigrantes adultes, the Quebec College ESL Benchmarks (provisional version), and the Échelle des niveaux de compétence en français langue seconde pour le collégial (provisional version). These benchmark scales include 12 levels.

For this project, only the first 10 levels were taken into account, since the proficiency level of the target audience generally ranges from 1 to 10. The SLPT scale development team selected indicators whose content was common to that of at least two scales. The team subsequently chose other indicators that were consistent with and complementary to the ones initially selected. It later added descriptions to the scale from the Canadian Language Benchmarks. Then 147 passages and 302 items were prepared to delimit the scale indicators and make up the proficiency test.

Importance of a Scale of Proficiency Levels

The interpretation of a proficiency level result based on a scale offers a number of advantages:

- The information obtained is standardized and can thus be compared with that derived from other tests and scales developed according to the same principles;

- The proficiency levels in the scale provide clear, practical information that can be used to analyze programs and develop their content with a view to standardizing second language services at the college level;

- These proficiency levels offer clear, practical information that can be used to formulate the learning objectives to be achieved, thus enabling students to prepare for tests and progress from one level to the next.

Proficiency Level Descriptions

The Scale of Second Language Proficiency Levels for the College Student describes the development of second language proficiency in 10 levels. For each level, it defines what the learner is able to understand orally and in writing.

The proficiency test is made up of a number of items divided into two categories:

- Items that focus on texts and measure the respondent’s degree of proficiency in written comprehension;

- Items that focus on audio recordings and measure the respondent’s degree of proficiency in oral comprehension.

NOTE: For a description of each of the proficiency levels, please refer to the complete list contained in the Management and Editing Help Guides. A summary version of the levels is also provided for college respondents in the Student Guide.

Test Types

The SLPT is adaptive. Each answer, whether correct or incorrect, affects the progression of the test, so that the respondent’s proficiency level is determined quickly and efficiently.

The platform can also operate in non-adaptive mode, so that items can be tested and calibrated.

Adaptive Test

The administration of an adaptive test is non-linear. The way in which the learner answers the first questions, whether correctly or incorrectly, determines the progression of the test. As the test is administered, it dynamically selects, from a bank of approved and calibrated items, the item most likely to assess the respondent’s skill level in light of his or her preceding answers. The answers are analyzed as the respondent gives them, and tasks are selected progressively according to the answers; the assessment of the respondent’s proficiency level is thus refined quickly and efficiently. The test ends when the proficiency level is determined. An adaptive test, in comparison with a non-adaptive test, makes it possible to reduce the number of items presented, decrease the test administration time and increase the reliability of skill assessment.
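The loop described above can be sketched as follows. The text does not say which psychometric model or stopping rule the SLPT uses, so this sketch makes three assumptions: a one-parameter (Rasch) model, selection of the unused item whose difficulty is closest to the current estimate, and a stop when the standard error falls below a target. All names and default values are hypothetical.

```python
import math


def p_correct(theta: float, difficulty: float) -> float:
    """Rasch model (assumed): probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def next_item(theta: float, bank: dict[str, float], used: set[str]) -> str:
    """Pick the unused calibrated item whose difficulty is closest to theta."""
    candidates = [item for item in bank if item not in used]
    return min(candidates, key=lambda item: abs(bank[item] - theta))


def update_theta(theta: float, answers: list[tuple[float, bool]], steps: int = 20) -> float:
    """Newton-Raphson maximum-likelihood estimate, clamped to [-3, +3]."""
    for _ in range(steps):
        probs = [p_correct(theta, b) for b, _ in answers]
        gradient = sum(int(ok) - p for (_, ok), p in zip(answers, probs))
        information = sum(p * (1.0 - p) for p in probs)
        if information < 1e-9:
            break
        theta = max(-3.0, min(3.0, theta + gradient / information))
    return theta


def run_adaptive_test(bank, answer_fn, max_items=20, se_target=0.5):
    """Administer items until the estimate is precise enough or items run out."""
    theta, used, answers = 0.0, set(), []
    while len(used) < min(max_items, len(bank)):
        item = next_item(theta, bank, used)
        used.add(item)
        answers.append((bank[item], answer_fn(item)))
        theta = update_theta(theta, answers)
        info = sum(p_correct(theta, b) * (1.0 - p_correct(theta, b)) for b, _ in answers)
        if info > 0 and 1.0 / math.sqrt(info) <= se_target:
            break
    return theta, len(used)
```

Here `bank` maps item identifiers to calibrated difficulty values and `answer_fn` returns whether the respondent answered a given item correctly. Each answer moves the estimate, which in turn changes which item is presented next, which is the non-linear behaviour the paragraph describes.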

The results for the adaptive test vary between -3 and +3. For purposes of interpretation, these results (called “thetas”) are matched with the language proficiency scale levels. In the Cut scores section, the proficiency levels are identified and the thetas corresponding to each level are defined.
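As an illustration of how thetas might be matched with levels, the sketch below splits the range from -3 to +3 into 10 levels using nine ascending cut scores. The cut score values are invented for the example; the actual values are the ones defined in the Cut scores section of the platform.

```python
from bisect import bisect_right

# Hypothetical cut scores: nine ascending thresholds give ten levels.
CUT_SCORES = [-2.4, -1.8, -1.2, -0.6, 0.0, 0.6, 1.2, 1.8, 2.4]


def level_from_theta(theta: float, cut_scores: list[float] = CUT_SCORES) -> int:
    """Return the proficiency level (1 to 10) for a theta in [-3, +3]."""
    theta = max(-3.0, min(3.0, theta))  # keep out-of-range values on the scale
    # A theta equal to a cut score is assigned to the higher level (assumption).
    return bisect_right(cut_scores, theta) + 1
```

For example, with these invented thresholds, `level_from_theta(-3.0)` returns 1 and `level_from_theta(3.0)` returns 10.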

The test is generally used first in non-adaptive mode, so that a representative sample of respondents can go through a representative number of approved items (say, about a hundred). The items can then be calibrated, i.e., each can be assigned a difficulty value on a scale of -3 to +3. Once the test includes about a hundred calibrated items, it can be used in adaptive mode. Adaptive mode includes an automatic calibration mechanism for new items: each respondent is given a certain number of non-calibrated items in each category (for example, two per category), in addition to the items chosen to measure his or her language skill. The results for these items are not used in the assessment of skill. Once a certain number of respondents (say, 150) have answered a given non-calibrated item, the administrator can calibrate the item by clicking on the Calibrate button in the Calibration section.
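The bookkeeping behind this mechanism can be sketched as follows: answers to non-calibrated items are left out of the skill assessment, and an item is flagged once enough respondents have answered it. The function names and data shapes are hypothetical, and the threshold of 150 is simply the illustrative figure quoted above.

```python
from collections import Counter

MIN_RESPONSES = 150  # illustrative threshold taken from the text ("say, 150")


def scored_responses(responses, calibrated):
    """Keep only answers to calibrated items; the others do not affect the result."""
    return [(item_id, ok) for item_id, ok in responses if item_id in calibrated]


def items_ready_for_calibration(responses, calibrated, min_responses=MIN_RESPONSES):
    """List non-calibrated items that have collected enough answers."""
    counts = Counter(item_id for item_id, _ in responses if item_id not in calibrated)
    return sorted(item_id for item_id, n in counts.items() if n >= min_responses)
```

Here `responses` is a list of `(item_id, correct)` pairs pooled across respondents and `calibrated` is the set of item identifiers that already have a difficulty value.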

Non-adaptive Test

The administration of the non-adaptive test is linear, with a predetermined number of questions to which the learner must respond. The test ends when the bank of questions is used up.

The test in non-adaptive mode randomly presents all items created and approved in the editing module. The non-adaptive mode is used essentially to calibrate items, i.e., to determine their level of difficulty by having a representative sample of respondents take the test. In this mode, the respondent’s results are not calculated in thetas, but according to the number of correct answers out of the number of items presented.
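A minimal sketch of non-adaptive mode, under the assumption that it amounts to shuffling the approved items and counting correct answers, is given below. The function names are hypothetical.

```python
import random


def presentation_order(approved_items, rng=None):
    """Return all approved items in random order, without changing the input."""
    rng = rng or random.Random()
    order = list(approved_items)
    rng.shuffle(order)
    return order


def raw_score(results):
    """Return (correct, presented) for a list of per-item booleans."""
    return sum(1 for ok in results if ok), len(results)
```

A respondent who answers two of three presented items correctly would therefore receive a result of 2 out of 3 rather than a theta.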

Production Team

Application Design

Educational Design and Drafting

Project Management

Test Evaluation

Translation

Drafting of Implementation Guide

Language Editing


System Design and Programming

Web Integration

Graphic Design

Audio Production

Voice


Acknowledgments

We at CCDMD wish to thank the following individuals and groups: Deborah Armstrong, Rebecca Baker, Chantal Bélanger, Kathye Bélanger, Philippe Bonneau, Marie-Pierre Bouchard, Yvonne Christiansen, Marie-Claude Doucet, Cathie Dugas, Paul Fournier, Philippe Gagné, Sylvain Gagnon, Francine Gervais, Sue Harrison, Réjean Jobin, André Laferrière, Charles Lapointe, James Laviolette, Julia Lovatt, Michel D. Laurier, Susan MacNeil, Anne McMullon, Jean-Denis Moffet, Andrew Moore, Joanne Munn, Ian Murchison, Colette Noël, Patrick Peachey, Christian Ragusich, Michael Randall, Pierre Richard, Joan Thompson, Rachel Tunnicliffe and Inèse Wilde, as well as

This work is dedicated to the many teachers who contributed in one way or another to the production of a fair and reliable second language proficiency test that is fitting for young students in Québec. This project is funded by the Québec Ministère de l’Éducation, de l’Enseignement supérieur et de la Recherche and the Canada-Québec Agreement on Minority-language Education and Second Language Instruction.